Bodies of days gone by: Russians attacked with deepfakes from 2016

In January, a new wave of deepfakes emerged in Russia and worldwide, generated from photos and videos taken in 2016 that users had posted en masse on social networks. To produce plausible fakes, fraudsters collect publicly available material with special programs. According to experts, such archival images carry none of the digital traces that usually help identify a forgery, which makes them especially convenient for attackers. As a result, deepfakes with explicit content are being created. Experts warn that the number of such fakes may rise sharply in the near future. Ten years ago, AI technologies were not yet in wide use, so old photos and videos are considered "clean" and are ideal for creating falsifications.

How messenger users are deceived

A wave of social media posts featuring photos and videos taken in 2016 has given rise to a new kind of deepfake, cybersecurity experts told Izvestia. Scammers use archival images to create fake compromising videos, the so-called deepfakes from the past. Previously, source material had to be sought out deliberately; now users are making it publicly available themselves.

"It is enough for parsing systems (automated programs for collecting data from websites) to extract images from social media pages in order to obtain material for forgeries," explained Alexander Parkin, head of research projects at VisionLabs.

According to Igor Bederov, founder of the Internet Search company, the deepfake problem is already widespread. One in ten Russians has faced fraud attempts involving deepfakes, and total damage from cybercrime in the first seven months of 2025 reached 119 billion rubles, 16% more than a year earlier.

"Access to archived photos can lead to a sharp increase in such attacks," said Igor Bederov.

According to his estimates, the number of crimes involving deepfakes may increase five- to tenfold in the coming months, and the share of cases causing direct financial damage will also grow.

Sargis Shmavonian, an information security expert at Cyberprotect, confirmed this forecast, noting that there are no accurate statistics on deepfakes, since many cases are not reported to law enforcement agencies.

"The tools for creating deepfakes have become widely available, and the 'raw material' for them is abundant, so a sharp increase in such crimes looks quite realistic," the specialist emphasized.

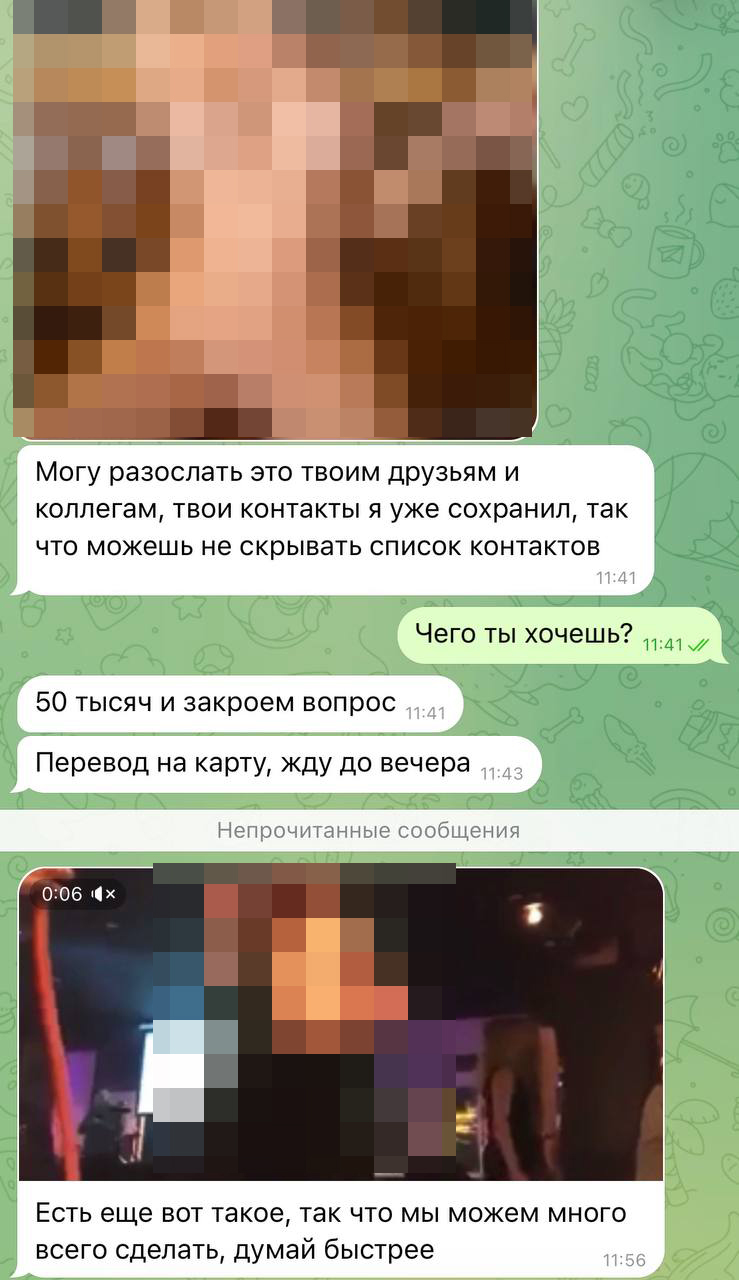

Izvestia reviewed user complaints received by the hotline of the Regional Public Center for Internet Technologies (ROCIT). In January 2026, people reported deepfakes on Telegram and channels spreading defamatory information. One of the victims, Anna from Nizhny Novgorod, said that she had received intimate videos purporting to show her, along with threats to distribute them further. According to her, she looked much younger in the recordings than she is now.

"I just blocked the sender, reported the content, and restricted contact with strangers. After that, there were no new blackmail attempts," she said.

From a legal point of view, the fraudsters' actions fall under articles of the Criminal Code, explained lawyer Igor Dolgopolov. The primary one is Article 159 of the Criminal Code of the Russian Federation ("Fraud"), along with, depending on the circumstances, violation of privacy.

"The legislation does not classify deepfakes separately as 'old' or 'new,'" he specified.

Where to find potential victims

This type of fraud is currently at the data collection stage, so most cases are not yet classified as a separate category and are counted in the general statistics on crimes involving AI. Thousands of attacks may be taking place already, but the real scale of the problem will become noticeable in the coming months, when the accumulated archives begin to be used on a large scale for personalized blackmail and deception, explained Marina Probets, an Internet analyst at Gazinformservice.

"The '2016' flash mob, which is now actively spreading on social networks, can be used by attackers to combine old and current images. This helps to refine facial features and improve the quality of fakes. The danger lies not so much in the flash mob itself as in the rapid development of AI, which simplifies the creation of convincing fakes," said Maxim Gryazev, head of the digital threat analysis and assessment department at Infosecurity (Softline Group).

In the coming years, the number of deepfakes will multiply, growing up to fivefold per year, according to MWS AI. The company notes that Russia has already seen many cases of explicit deepfakes, in which a person's face taken from a photograph is superimposed on real videos.

According to Lyudmila Bogatyreva, head of the IT department at the Polylog agency, services for creating such effects are already widely available, including directly on social networks.

"People are trusting video content less and less, realizing how easy it is to fake," she noted.

Old photos and videos can be used to fabricate a compromising past, from fake personal stories to defamatory statements about a person's views, according to Alexey Drozd, head of the security department at SearchInform.

At the same time, not all experts consider flash mobs to be a key threat. According to Dmitry Anikin, head of data research at Kaspersky Lab, the risks of using such images to train neural networks exist, but they are not critical.

"Modern algorithms can accurately determine the age of images, and they do not need mass publication of old photos to do so," the expert said.

Experts agree on one thing: it is important for users to remain digitally cautious and attentive to what content they make public. Any data once posted online can be reused — and not always in the expected context.
